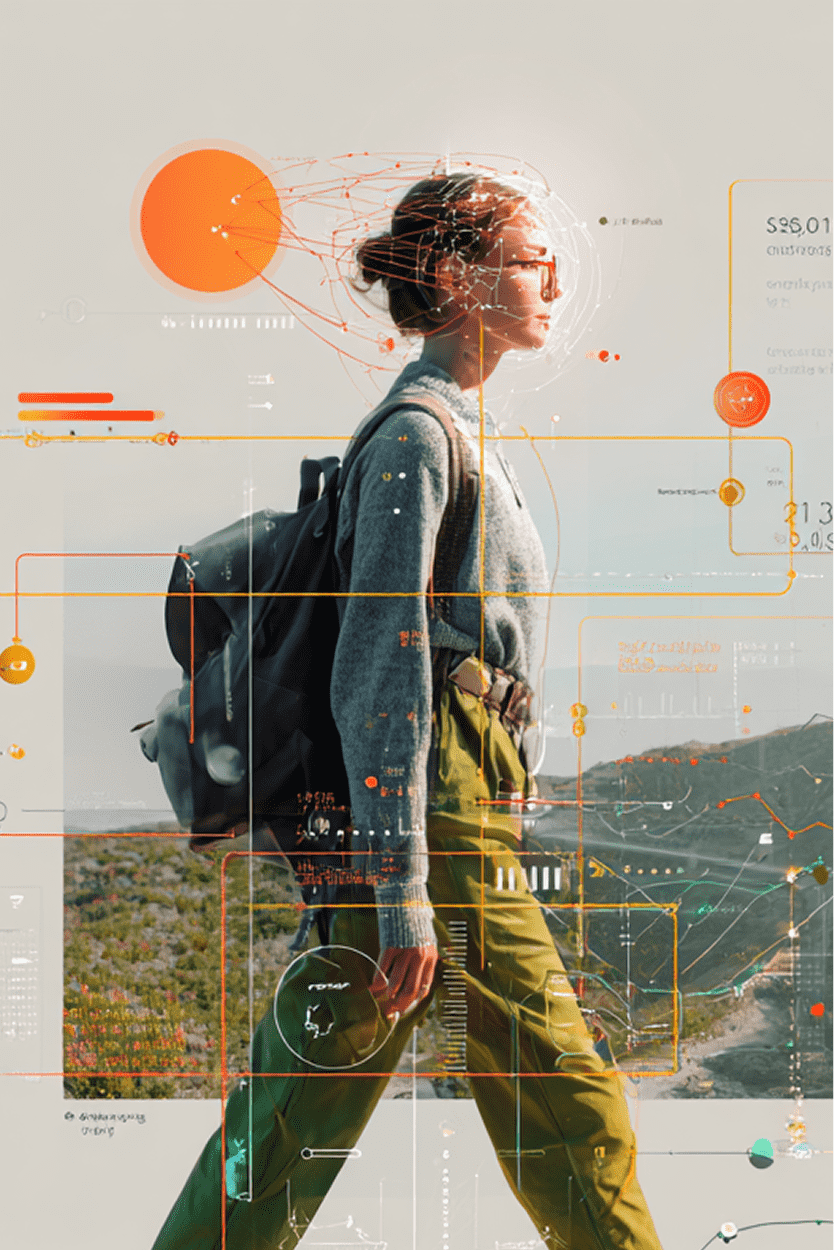

Cognitive UI: Designing Interfaces for the Mind

1. Introduction — Why Cognitive UI Matters Now

We stand at a design inflection point. Traditional digital interfaces — screens, buttons, menus — were built for computers. What if the next wave of interfaces needs to be built for human cognition?

As early as 2017 (and later redefined in 2019), I explored how Felt Value is not fixed but experienced, shaped by emotion, memory, presence, and meaning. Through projects like Emotional Physics, I examined identities as evolving systems rather than static states: adaptive, decentralized, and seeking homeostasis, much like ecological systems, neural networks, DAOs, or cellular-automata-style governance. If stable-state value defines structure, felt value is the signal: a human- and machine-readable expression of context, sentiment, and lived experience.

Cognitive UI emerges from this foundation: an interface paradigm where both humans and AI contribute value signals, and systems optimize not just for efficiency or output, but for relational balance and collective well-being. As voice assistants (Siri, Alexa, ChatGPT/Claude voice) become increasingly ubiquitous, interfaces are no longer visual constructs alone.

They are cognitive experiences — how humans perceive, interpret, remember, and act on information provided by AI. This is what Local* Agency has discovered and named Cognitive UI.

2. What Is Cognitive UI?

Cognitive UI (Cog UI) isn’t just another UX buzzword. It’s a design philosophy and emerging field that:

- Prioritizes mental models over screen layouts

- Treats interface interaction as a cognitive process, not a navigation task

- Designs for learning styles, memory, semantic interpretation, emotion, and context

- Adapts dynamically to individual mental patterns and user preferences

At its core, a Cognitive UI system asks:

What does this interface look like inside the user’s mind?

The text you’re reading in this article was designed with Cog UI in mind. Formatting for the new Agentic era means that each blog article produced with Local* Agency is accompanied by AEO (answer engine optimization) as well as AI-driven formatting patterns, because how we* interpret information is shifting toward both human and AI readers.

3. How It Differs From Related Disciplines

| Field | Focus | Limitations |
| --- | --- | --- |
| Traditional UI/UX | Visual interfaces and interactions | Often static and one-size-fits-all |
| Cognitive Psychology in UX | Human cognitive limitations and heuristics | Academic insight with limited practical frameworks |
| Voice UI & Conversational UI | Dialogue-based interactions | Focused on syntax and dialogue, not cognitive personalization |
| Adaptive/Contextual UX | Adapting interfaces to their environment only | Mostly rule-based; overlooks Cognitive UI entirely |

Cognitive UI = Cognitive Science + Adaptive Interface + Voice/Multimodal Interaction.

4. The Human Side — Cognition First

A Cognitive UI redesigns the interface around:

Mental Models

People don’t read menus; they recall patterns. Cognitive UI maps to how users think, categorize, and anticipate interactions (as seen within this blog article).

Learning Styles

- Visual learners prefer imagery and spatial relationships

- Auditory learners thrive with voice-first interfaces

- Kinesthetic learners benefit from pacing and feedback loops

Cognitive UI caters to learning-style preferences in real time.

Memory & Cognitive Load

Cognitive UI designs interfaces that:

- Reduce memory friction,

- Leverage recognition over recall,

- Allow users to offload internal processing when needed.

5. Voice as a Cognitive Gateway

Voice interaction isn’t just another modality; it’s a cognitive amplifier.

With tools like ChatGPT and Claude voice:

- Interfaces can predict intention

- Conversations become contextual memories

- Users don’t navigate — they think and speak

This aligns with how humans naturally process information, not how machines layer menus.

6. Introducing “Parachute” — A First Step in Cognitive UI

In 2025, Local* Agency partnered with Parachute, an organization founded by Aaron G Neyer, with founding engineering by Jon.Bo & Lucian Hymer. Parachute demonstrates and extends several core principles of Cognitive UI, namely:

- Voice-driven recording that doesn’t rely purely on menu navigation

- Transcription that aligns with thought patterns

- Memory recall and organization adapted to personal cognition

For Local* Agency, Parachute isn’t merely an app — it’s an experiment in cognitive interface design.

7. Principles of Cognitive UI

Local* Agency has begun to integrate and follow these principles when designing with Cognitive UI:

- Mind-Centered Design: Interfaces must align with internal human schemas, not (only) external UI/X patterns.

- Adaptive Personalization: UI should learn how the user thinks, not only what they do.

- Multimodal Interaction (HCI): Voice, gesture, vision, accessibility, and contextual memory are integrated, not siloed.

- Cognitive Load Reduction: Information appears when it’s needed, shaped by how it’s processed rather than how it’s stored.

- Semantic Continuity: Interfaces respond with meaning, not commands.

8. Real-World Examples (Existing + Emerging)

These are adjacent but not fully Cognitive UI:

- Smart voice assistants (Siri, Alexa)

- Predictive text and suggestion UIs

- Personalized news feeds

- Adaptive learning platforms

- AI assistants that recall prior context

What’s missing is the cognitive framing as a holistic design discipline.

Smart Voice Assistants (Siri, Alexa, Google Assistant)

What they do well:

- Translate natural language into commands

- Reduce friction for simple, repeatable tasks

- Offer hands-free, voice-first interaction

Where they fall short:

- Treat voice as an input method, not a cognitive interface

- Lack persistent understanding of how you think or reason

- Conversations reset instead of evolving with the user’s mental model

Cognitive UI gap:

A true Cognitive UI would adapt its responses based on how a user frames questions, remembers information, and revisits ideas over time — not just what was said last.

Predictive Text & Suggestion Interfaces

(Autocomplete, Smart Replies, AI writing assistants)

What they do well:

- Anticipate likely next words or actions

- Reduce effort and speed up execution

- Learn general linguistic patterns

Where they fall short:

- Optimize for probability, not intention

- Don’t distinguish between thinking styles (exploratory vs. decisive)

- Offer suggestions without understanding why a user is writing

Cognitive UI gap:

Cognitive UI would recognize whether a user is brainstorming, refining, or committing — and shift tone, pacing, and suggestions accordingly.
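To make this gap concrete, here is a minimal Python sketch, assuming an editor can observe how much text a user adds, deletes, and revisits within a short window. It guesses the writing mode and picks a suggestion policy for it. The names (`EditWindow`, `classify_mode`, `suggestion_policy`), the thresholds, and the policies are hypothetical illustrations, not the behavior of any product named in this article.

```python
from dataclasses import dataclass


# Hypothetical editing signals observed over a short time window.
@dataclass
class EditWindow:
    chars_added: int
    chars_deleted: int
    revisits: int  # times the user jumped back to earlier text


# Hypothetical suggestion policy for each writing mode.
POLICIES = {
    "brainstorming": {"max_suggestions": 5, "tone": "divergent"},
    "refining": {"max_suggestions": 2, "tone": "precise"},
    "committing": {"max_suggestions": 0, "tone": "quiet"},
}


def classify_mode(w: EditWindow) -> str:
    """Guess the writing mode from editing behavior (illustrative thresholds)."""
    total = w.chars_added + w.chars_deleted
    if total < 20:
        return "committing"  # little change: the user is settling on text
    if w.chars_deleted / total >= 0.3 or w.revisits >= 2:
        return "refining"  # heavy rewriting or jumping back to earlier text
    return "brainstorming"  # mostly forward, additive writing


def suggestion_policy(w: EditWindow) -> dict:
    """Return the suggestion policy for the detected mode."""
    return POLICIES[classify_mode(w)]


print(classify_mode(EditWindow(chars_added=400, chars_deleted=10, revisits=0)))  # brainstorming
print(suggestion_policy(EditWindow(chars_added=120, chars_deleted=80, revisits=3)))  # {'max_suggestions': 2, 'tone': 'precise'}
```

Committing is deliberately the quietest mode here: when a user is settling on wording, fewer interruptions is the point.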

Personalized News Feeds & Content Algorithms

(Social feeds, newsletters, recommendation engines)

What they do well:

- Adapt content based on interests and behavior

- Reduce information overload at scale

- Surface “relevant” material

Where they fall short:

- Personalization is behavioral, not cognitive

- Reinforces consumption loops rather than learning patterns

- Optimizes engagement over comprehension

Cognitive UI gap:

A Cognitive UI feed would understand how a user absorbs information — scanning vs. deep reading, audio vs. visual — and adjust format and density accordingly.
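A minimal Python sketch of that idea, assuming the feed can log how many words were on screen for each item and how long the user stayed on it. It estimates an effective reading rate, labels recent behavior as scanning or deep reading, and picks a format and density. The 500 words-per-minute cutoff, the format names, and the `prefers_audio` flag are hypothetical assumptions.

```python
from statistics import median

# Hypothetical cutoff in words per minute; sustained rates above it suggest skimming.
SCAN_WPM_THRESHOLD = 500


def reading_mode(sessions: list[tuple[int, float]]) -> str:
    """Label recent behavior as 'scanning' or 'deep' from (words_on_screen, seconds_spent) pairs."""
    rates = [words / (seconds / 60) for words, seconds in sessions if seconds > 0]
    if not rates:
        return "unknown"
    # The median resists outliers such as an item left open while the user stepped away.
    return "scanning" if median(rates) > SCAN_WPM_THRESHOLD else "deep"


def feed_layout(sessions: list[tuple[int, float]], prefers_audio: bool = False) -> dict:
    """Pick a presentation format and information density for the next items."""
    mode = reading_mode(sessions)
    if prefers_audio:
        fmt = "audio_summary"
    elif mode == "scanning":
        fmt = "headline_cards"
    else:
        fmt = "long_form"
    density = {"scanning": "high", "deep": "low", "unknown": "medium"}[mode]
    return {"mode": mode, "format": fmt, "density": density}


print(feed_layout([(600, 30), (800, 40)]))  # {'mode': 'scanning', 'format': 'headline_cards', 'density': 'high'}
```

The design choice is to adapt presentation rather than topic: scanners get dense headline cards, deep readers get fewer, longer pieces, and an explicit audio preference overrides both.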

Adaptive Learning Platforms

(Duolingo, Khan Academy, Coursera, tutoring AIs)

What they do well:

- Adjust difficulty based on performance

- Track progress over time

- Offer multiple content formats

Where they fall short:

- Adaptation is rule-based, not cognitive

- Focus on outcomes, not mental process

- Rarely change structure based on learning style or confusion signals

Cognitive UI gap:

Cognitive UI would detect hesitation, repetition, or conceptual drift — and reshape how concepts are explained in real time.
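The detection step can be sketched in Python as a few heuristics over a learner’s recent attempts, each feeding a choice of how to reshape the next explanation. This is a minimal sketch under stated assumptions: that each attempt can be tagged with the concept it engaged, that a per-learner baseline response time is known, and that the five-attempt window, the 2x hesitation threshold, and the strategy names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    concept: str    # concept the learner's answer engaged with (hypothetical tagging)
    seconds: float  # time taken to respond
    correct: bool


def confusion_signals(attempts: list[Attempt], target: str,
                      baseline_seconds: float, window: int = 5) -> dict:
    """Derive hesitation, repetition, and drift flags from the most recent attempts."""
    recent = attempts[-window:]
    if not recent:
        return {"hesitation": False, "repetition": False, "drift": False}
    off_target = sum(1 for a in recent if a.concept != target)
    return {
        # Hesitation: a response much slower than this learner's usual pace.
        "hesitation": any(a.seconds > 2 * baseline_seconds for a in recent),
        # Repetition: the same target concept missed more than once.
        "repetition": sum(1 for a in recent if a.concept == target and not a.correct) >= 2,
        # Drift: most recent answers engage a different concept than the target.
        "drift": off_target / len(recent) > 0.5,
    }


def next_explanation(signals: dict) -> str:
    """Choose how to reshape the next explanation (strategy names are hypothetical)."""
    if signals["drift"]:
        return "reconnect_to_prerequisite"
    if signals["repetition"]:
        return "switch_representation"  # e.g. a worked example instead of a definition
    if signals["hesitation"]:
        return "smaller_steps"
    return "continue"


history = [Attempt("fractions", 12, False), Attempt("fractions", 14, False), Attempt("fractions", 31, True)]
print(next_explanation(confusion_signals(history, target="fractions", baseline_seconds=12)))  # switch_representation
```

The checks are ordered from broadest to narrowest: drift suggests the learner is in the wrong conceptual place entirely, so it takes priority over repeated misses or a single slow answer.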

AI Assistants with Contextual Memory

(ChatGPT, Claude, Notion AI, agentic tools)

What they do well:

- Recall prior context and preferences

- Maintain conversational continuity

- Act as external cognitive support

Where they fall short:

- Memory is informational, not experiential

- Limited understanding of emotional or cognitive states

- Often reactive rather than anticipatory

Cognitive UI gap:

A Cognitive UI assistant wouldn’t just remember what you said — it would understand how you think, when you need structure, and when you need space to explore.

9. The Future of Human-Centered Interfaces

Cognitive UI opens doors to:

- Interfaces that think like humans

- AI that augments human memory and reasoning

- Systems that anticipate intention rather than require instruction

- Tools that feel like extensions of thought

This is the next evolution — beyond screens, beyond clicks, into cognitive resonance.

10. Getting Started — A Cognitive UI Checklist

Designers and builders can begin here:

- Map the user’s mental model first: The Who Behind the Why, The How Behind the Who

- Prioritize voice and natural-language interaction: do tone-of-voice work; get to know your clients, team, and users

- Adapt interfaces to individual learning/cognitive styles: build within a "System of Systems" framework

- Use AI context for memory and prediction: The How Behind the Who resurfaces

- Measure cognitive load, not just task success: accessibility is relative; the goal is to avoid overloading users or drastically shifting their UI
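For the measurement step, one established instrument for perceived workload is the NASA Task Load Index (NASA-TLX), which asks users to rate six subscales after a task. The Python sketch below computes its unweighted "raw TLX" variant, the mean of the six 0-100 ratings; the full NASA-TLX also offers a weighted score based on pairwise comparisons of the subscales. The function names, the use of the score to compare two interface variants, and the example ratings are illustrative assumptions, not data.

```python
# The six NASA-TLX subscales; each is rated from 0 (low) to 100 (high).
# For "performance", a higher rating means the user felt they performed worse.
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: dict[str, float]) -> float:
    """Raw (unweighted) NASA-TLX score: the mean of the six subscale ratings."""
    missing = set(SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing subscales: {sorted(missing)}")
    for name in SUBSCALES:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"{name} rating must be between 0 and 100")
    return sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)


def mean_load(participants: list[dict[str, float]]) -> float:
    """Average raw TLX across participants who used one interface variant."""
    return sum(raw_tlx(r) for r in participants) / len(participants)


# Hypothetical ratings from one participant per interface variant.
variant_a = [{"mental": 70, "physical": 10, "temporal": 50, "performance": 40, "effort": 60, "frustration": 45}]
variant_b = [{"mental": 45, "physical": 5, "temporal": 30, "performance": 25, "effort": 35, "frustration": 20}]
print(mean_load(variant_a), mean_load(variant_b))
```

Pairing a workload score like this with task-success metrics lets a team see when a variant "works" but costs users more mental effort.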

Conclusion

Cognitive UI is a necessary expansion of how we* design digital systems for thinking, moving, interactive people. As AI becomes more conversational and immersive, the bottleneck won’t be screens; it will be cognitive accessibility. Cognitive UI is helping Local* Agency innovate in how we frame, solve, and scale problems in the next era of "We* Are Agentic."

Design for the Mind, Not the Interface

If you’re building products for a voice-first, AI-powered future, Cognitive UI offers a new lens for how interfaces should work — and feel. Let’s explore how mind-centered design can shape the next generation of human–AI interaction.